Graphical Models: Bayesian Belief Networks, Markov Random Fields (MRFs)

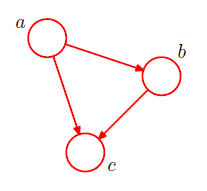

Probabilities play a central role in modern pattern recognition. It is highly advantageous to augment the analysis using diagrammatic representations of probability distributions, called probabilistic graphical models. These offer several useful properties:

1. They provide a simple way to visualize the structure of a probabilistic model and can be used to design and motivate new models.
2. Insights into the properties of the model, including conditional independence properties, can be obtained by inspection of the graph.
3. Complex computations, required to perform inference and learning in sophisticated models, can be expressed in terms of graphical manipulations, in which the underlying mathematical expressions are carried along implicitly.

A graph comprises nodes (also called vertices) connected by links (also known as edges or arcs). In a probabilistic graphical model, each node represents a random variable (or group of random variables), and the links express probabilistic relationship...
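To make the node-and-link picture concrete, here is a minimal sketch of a directed graphical model in plain Python. It assumes the directed (Bayesian network) case, where the joint distribution is the product of one conditional factor per node given its parents. The variable names (`Cloudy`, `Rain`, `WetGrass`), the probability values, and the helpers `joint` and `edges` are all hypothetical and chosen only for illustration.

```python
from itertools import product

# Hypothetical three-variable chain: Cloudy -> Rain -> WetGrass.
# Each node is a binary random variable; each link runs from a parent to a child.
parents = {
    "Cloudy": [],
    "Rain": ["Cloudy"],
    "WetGrass": ["Rain"],
}

# Made-up conditional probability tables: P(node = True | parent values).
p_true = {
    "Cloudy": {(): 0.5},
    "Rain": {(True,): 0.8, (False,): 0.1},
    "WetGrass": {(True,): 0.9, (False,): 0.2},
}


def edges():
    """List the links of the graph as (parent, child) pairs."""
    return [(p, child) for child, pars in parents.items() for p in pars]


def joint(assignment):
    """Joint probability of a full assignment: one factor per node given its parents."""
    prob = 1.0
    for node, pars in parents.items():
        key = tuple(assignment[p] for p in pars)
        p = p_true[node][key]
        prob *= p if assignment[node] else 1.0 - p
    return prob


# Sum the joint over all 2^3 assignments; a valid model should total 1.
names = list(parents)
total = sum(
    joint(dict(zip(names, values)))
    for values in product([True, False], repeat=len(names))
)
```

The `parents` dictionary is the graph itself: reading it shows that `WetGrass` depends directly only on `Rain`, which is the kind of structural insight the graph is meant to expose by inspection.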