Advanced Topics in Machine Learning - CST396 KTU CS Sixth Semester Honours Notes - Dr Binu V P 9847390760

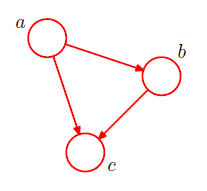

- About Me
- Syllabus
- Module 1 (Supervised Learning)
  - Overview of Machine Learning
  - Supervised Learning, Regression
  - Naive Bayes Classifier
  - Decision Trees (ID3)
  - Discriminative and Generative Learning Algorithms
- Module 2 (Unsupervised Learning)
  - Similarity Measures
  - Clustering: K-Means, EM Clustering
  - Hierarchical Clustering
  - K-Medoids Clustering
- Module 3 (Practical Aspects in Machine Learning)
  - Classification Performance Measures
  - Cross-Validation, Bias-Variance, Bagging, Boosting, AdaBoost
- Module 4 (Statistical Learning Theory)
  - PAC (Probably Approximately Correct) Learning
  - Vapnik-Chervonenkis (VC) Dimension
- Module 5 (Advanced Machine Learning Topics)
  - Graphical Models: Bayesian Belief Networks, Markov Random Fields (MRFs)
  - Inference in Graphical Models: Inference on Chains, Trees and Factor Graphs
  - Sampling Methods
  - Autoencoders and Variational Autoencoders (VAE)
  - GAN